About Me

I am a first-year Computer Science Ph.D. student at the University of Central Florida, supervised by Dr. Chen Chen. From January 2022 to July 2023, I interned at ByteDance, where I worked with Jie Wu. Prior to that, I earned my Master’s degree from Xiamen University and my Bachelor’s degree from Hainan University.

My primary research interest is Data-centric AI: I explore the role and applications of data across AIGC, pre-training, and downstream tasks. Specifically, I am investigating how to train models with data and annotations more efficiently, and how to construct automated data pipelines for various tasks. Recently, I have been working on using discriminative models and large multi-modal models to improve diffusion models.

Publications

(* indicates equal contribution; # indicates corresponding authorship.)

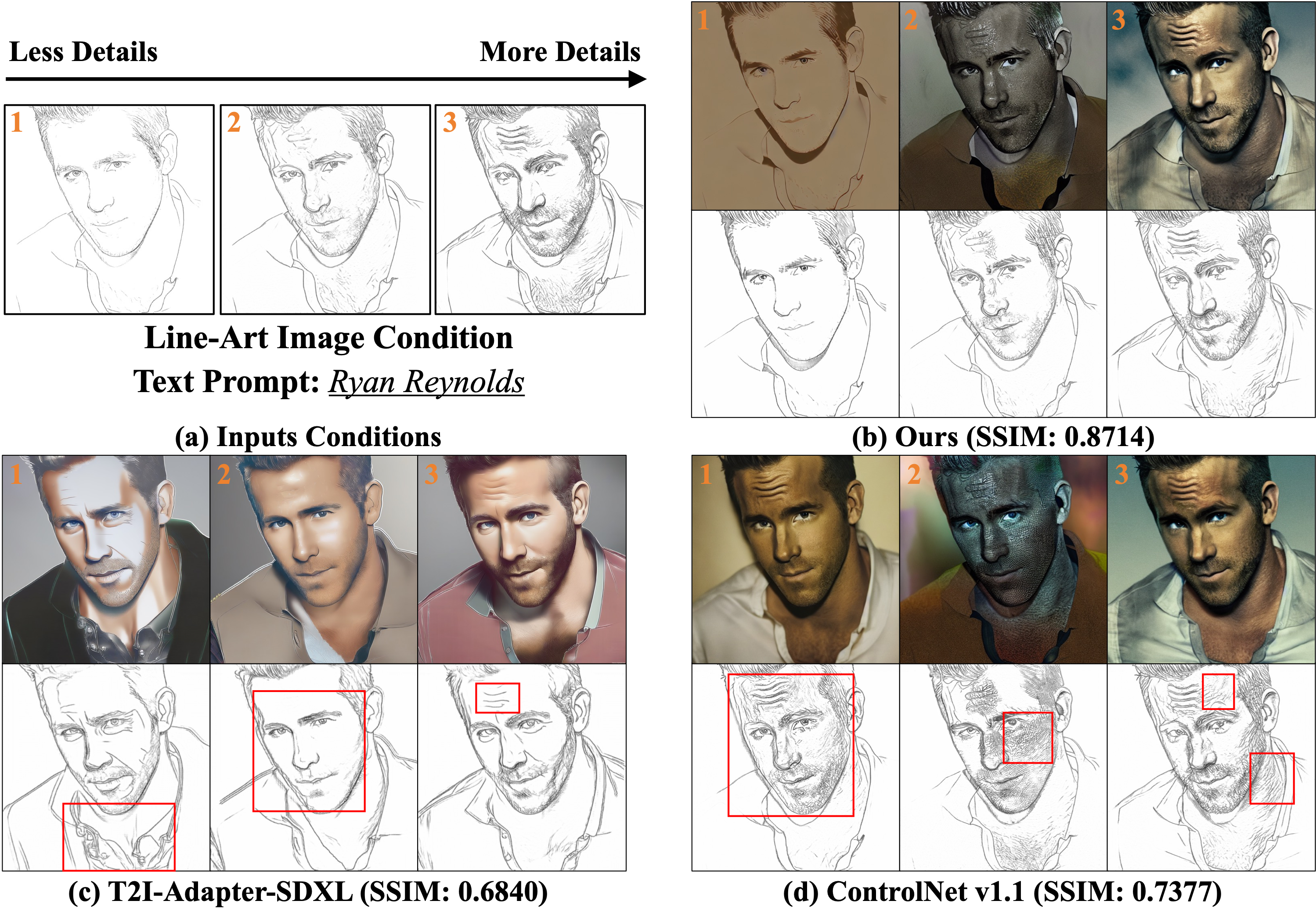

ControlNet++: Improving Conditional Controls with Efficient Consistency Feedback.

Ming Li, Taojiannan Yang, Huafeng Kuang, Jie Wu, Zhaoning Wang, Xuefeng Xiao, Chen Chen

arXiv 2024 [Website] [Code] [Demo]

- Existing efforts in controllable generation still perform poorly in controllability, with generated images deviating from input conditions.

- We show that pre-trained discriminative models can serve as powerful visual reward models to improve the controllability in a cycle-consistency manner.

- We disrupt the consistency between input images and conditions, and enable single-step denoising for efficient reward fine-tuning.

- We provide a unified and public evaluation of controllability and demonstrate that ControlNet++ comprehensively outperforms existing methods.

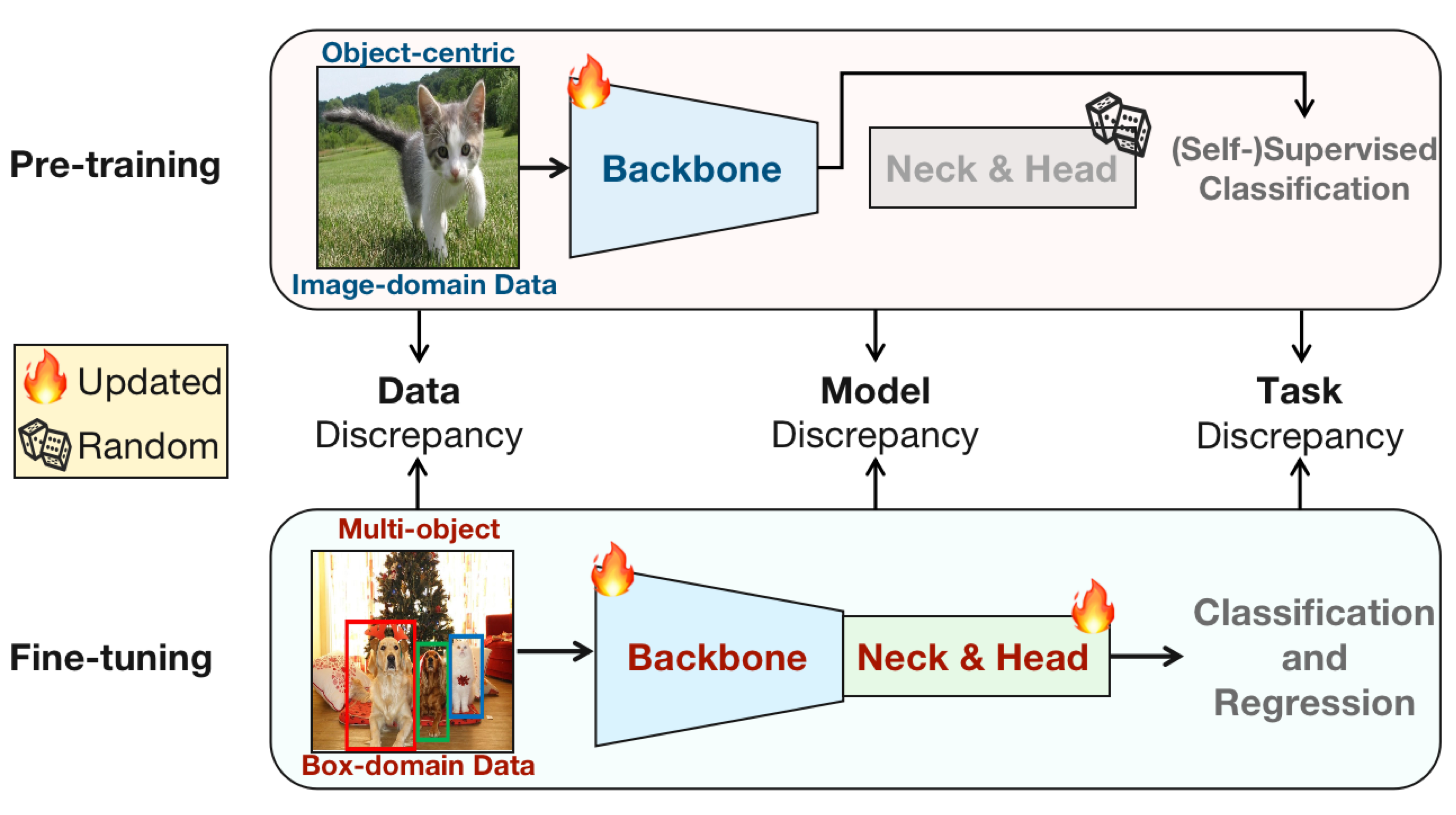

AlignDet: Aligning Pre-training and Fine-tuning in Object Detection.

Ming Li*, Jie Wu*#, Xionghui Wang, Chen Chen#, Jie Qin, Xuefeng Xiao, Rui Wang, Min Zheng, Xin Pan.

ICCV 2023 [Website] [Code] [Poster]

- We point out that existing detection algorithms are constrained by the data, model, and task discrepancies between pre-training and fine-tuning.

- We propose AlignDet to align these discrepancies, which constructs detection-oriented pre-training by learning classification and regression knowledge.

- AlignDet makes the first attempt to fully pre-train all kinds of detectors using a completely unsupervised paradigm, by integrating pre-trained backbones.

- AlignDet can achieve significant improvements across diverse protocols, such as detection algorithm, model backbone, data setting, and training schedule.

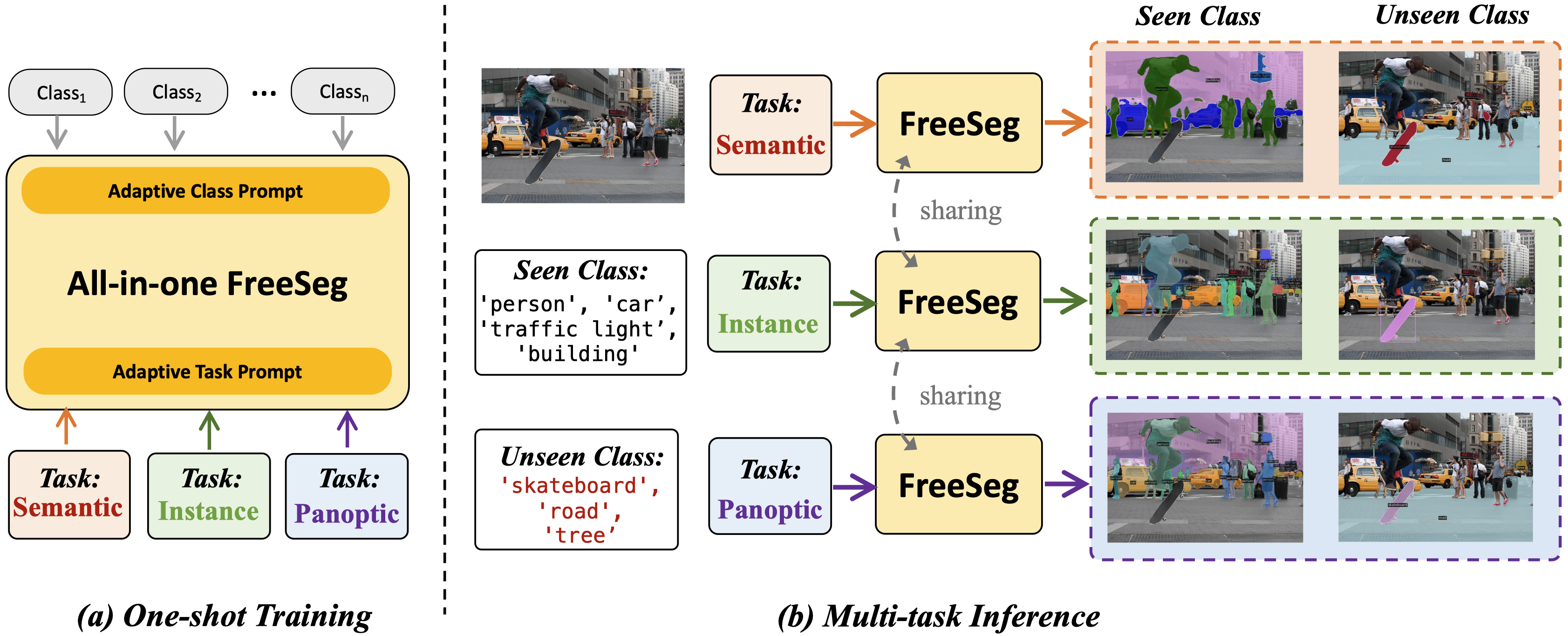

FreeSeg: Unified, Universal and Open-Vocabulary Image Segmentation.

Jie Qin*, Jie Wu*#, Pengxiang Yan, Ming Li, Ren Yuxi, Xuefeng Xiao, Yitong Wang, Rui Wang, Shilei Wen, Xin Pan, Xingang Wang#.

- We propose FreeSeg, a generic framework to accomplish Unified, Universal and Open-Vocabulary Image Segmentation.

- FreeSeg optimizes an all-in-one network via one-shot training and adaptive prompt learning, making a single model work for diverse segmentation tasks.

- FreeSeg establishes SOTA results on three Open-Vocabulary segmentation tasks, and outperforms the best task-specific architectures by a large margin.

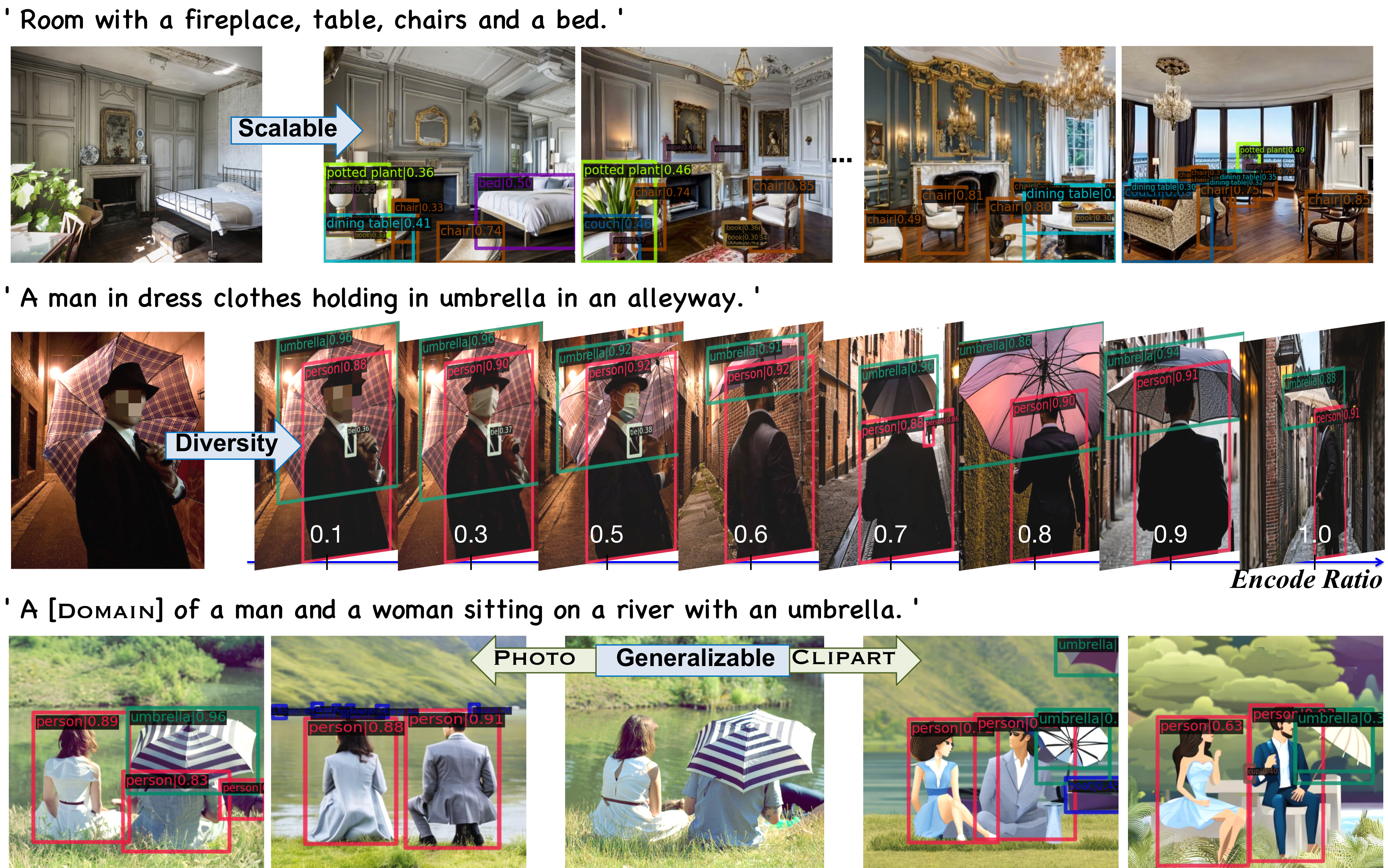

DiffusionEngine: Diffusion Model is Scalable Data Engine for Object Detection.

Manlin Zhang*, Jie Wu*#, Yuxi Ren*, Ming Li, Jie Qin, Xuefeng Xiao, Wei Liu, Rui Wang, Min Zheng, Andy J. Ma#.

- We reveal that the diffusion model is a scalable data engine for object detection.

- We present DiffusionEngine, which provides high-quality and diverse detection data using a pre-trained diffusion model and an effective Detection-Adapter.

- We demonstrate that scaling up data via DiffusionEngine achieves significant improvements in diverse scenarios, including various detection algorithms, self/semi-supervised pre-training, and data-scarce, label-scarce, and cross-domain settings.

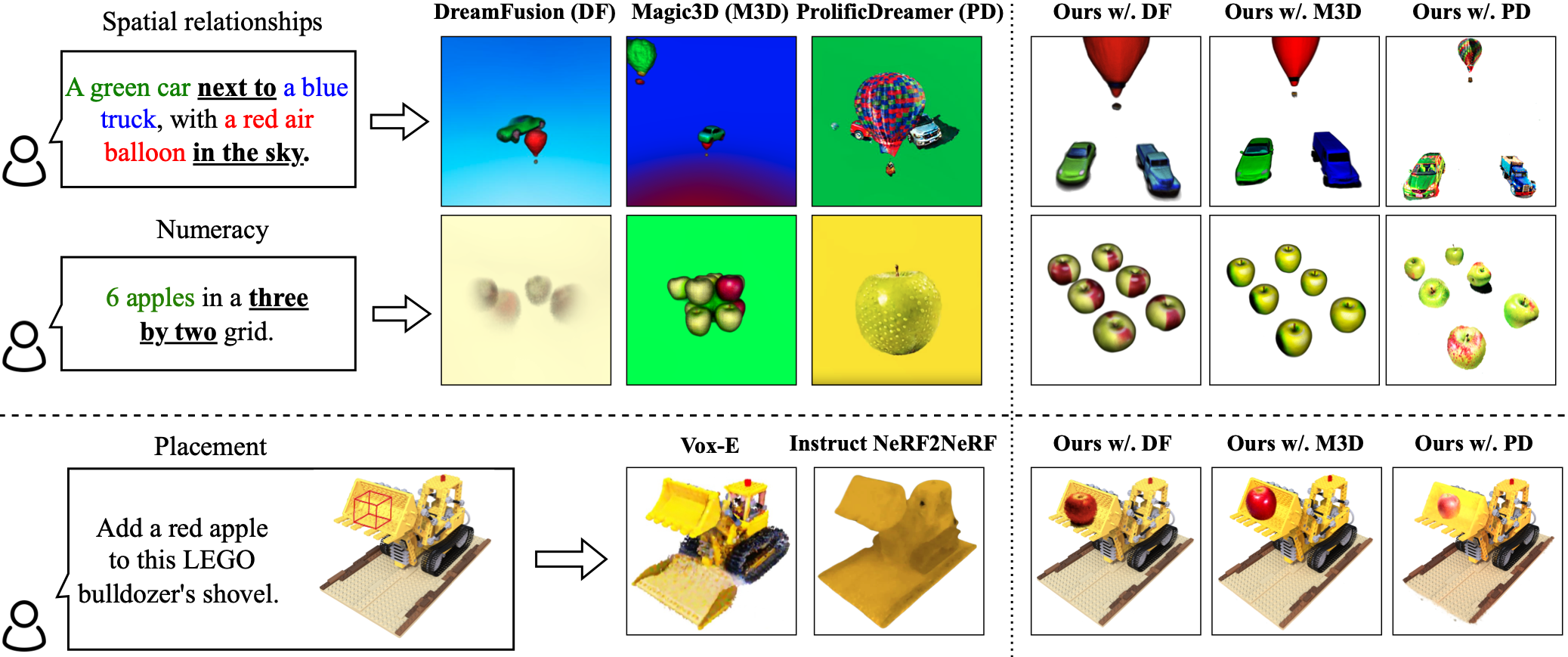

LucidDreaming: Controllable Object-Centric 3D Generation.

Zhaoning Wang, Ming Li, Chen Chen#.

arXiv 2023 [Website]

- We propose LucidDreaming, a plug-and-play framework to achieve controllable object-centric 3D generation with Large Language Models.

- We introduce clipped ray sampling and object-centric density bias initialization to generate multiple discrete 3D objects within a single scene.

- LucidDreaming offers a standard in the dataset, evaluation metrics, and clear strategies for the development of controllable 3D generation.

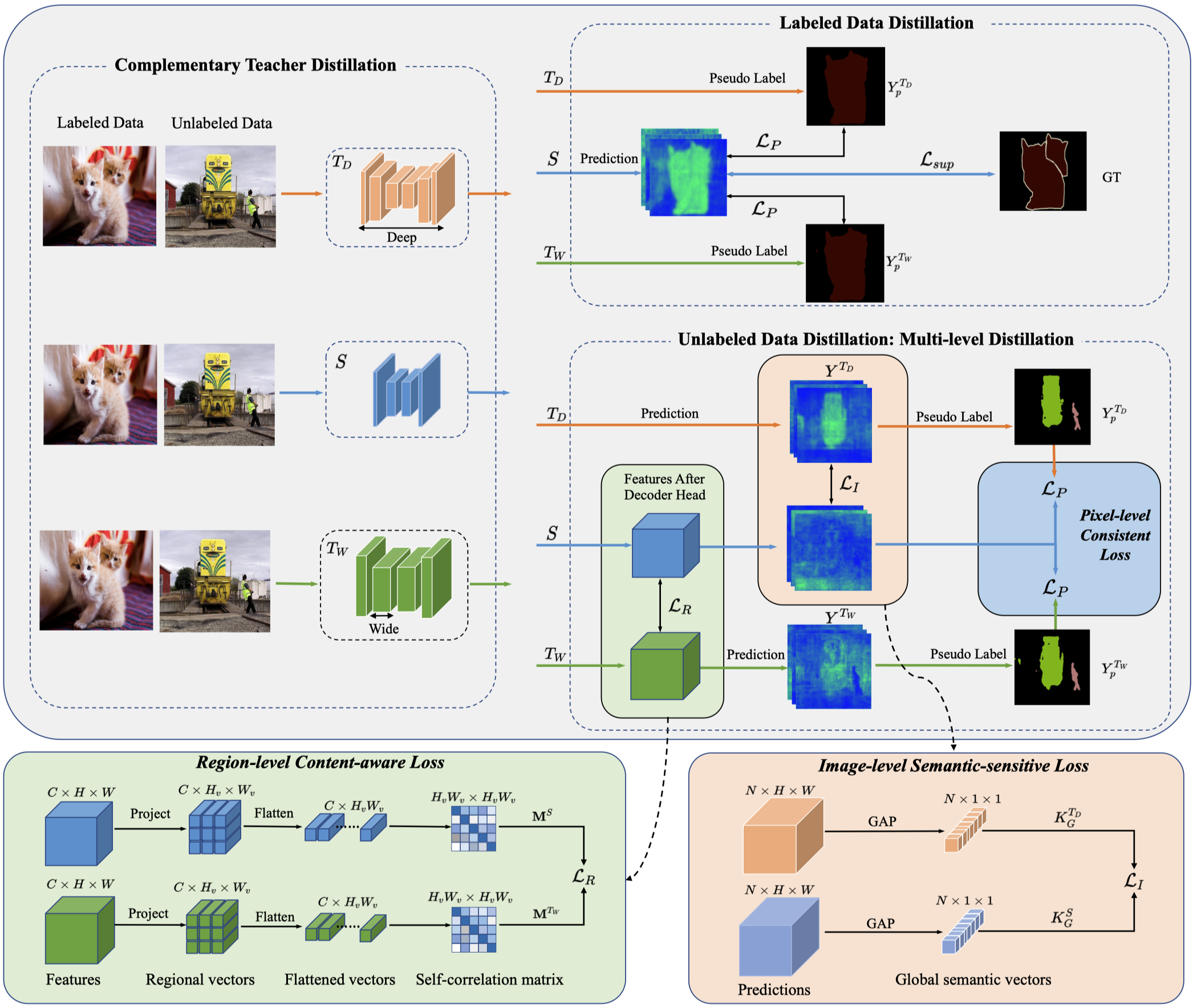

Multi-granularity Distillation Scheme Towards Lightweight Semi-supervised Semantic Segmentation.

Jie Qin*, Jie Wu*#, Ming Li, Xuefeng Xiao, Min Zheng, Xingang Wang#.

ECCV 2022 [Code]

- We offer the first attempt to obtain a lightweight model for semi-supervised semantic segmentation.

- We propose a multi-granularity distillation (MGD) scheme to distill task-specific concepts from two complementary teacher models into a student model.

- The labeled-unlabeled data cooperative distillation and the hierarchical loss paradigm help the lightweight model learn from fewer annotations.

- MGD outperforms competitive approaches by a large margin under diverse partition protocols, with a significant reduction in FLOPs.

IL-NeRF: Incremental Learning for Neural Radiance Fields with Camera Pose Alignment.

Letian Zhang, Ming Li, Chen Chen, Jie Xu.

arXiv 2023 [Website]

DLIP: Distilling Language-Image Pre-training.

Huafeng Kuang, Jie Wu, Xiawu Zheng, Ming Li, Xuefeng Xiao, Rui Wang, Min Zheng, Rongrong Ji.

arXiv 2023

First Place Solution to the CVPR’2023 AQTC Challenge: A Function-Interaction Centric Approach with Spatiotemporal Visual-Language Alignment.

Tom Tongjia Chen, Hongshan Yu, Zhengeng Yang, Ming Li, Zechuan Li, Jingwen Wang, Wei Miao, Wei Sun, Chen Chen.

CVPR 2023 Workshop [Challenge] [Code]

First Place Solution to the CVPR’2022 AVA Challenge: Parallel Pre-trained Transformers for Synthetic Data-based Instance Segmentation.

Ming Li*, Jie Wu*#, Jinhang Cai, Jie Qin, Yuxi Ren, Xuefeng Xiao, Min Zheng, Rui Wang, Xin Pan.

CVPR 2022 Workshop [Challenge]

Internships

- 2024.05 - 2024.08, TikTok, ByteDance, San Jose, USA.

- 2022.01 - 2023.07, ByteDance, Shenzhen, China.

Honors

- Champion of CVPR 2023 Long-form Video Understanding and Generation Challenge (Track 3).

- ORCGS Doctoral Fellowship, the University of Central Florida. 2023.

- Champion of CVPR 2022 AVA (Accessibility, Vision, and Autonomy) Challenge.

- Excellent Graduation Thesis of Hainan University. 2020.

- China National Inspirational Scholarship. 2017 and 2018.

Education

- 2023.09 - Present, Ph.D., Computer Science, University of Central Florida.

- 2020.09 - 2023.06, Master, Computer Science, Xiamen University.

- 2016.09 - 2020.06, Bachelor, Software Engineering, Hainan University.